Amazon now typically asks interviewees to code in an online document. However, this can vary; it could be on a physical whiteboard or a virtual one. Check with your recruiter which it will be and practice with it a lot. Now that you know what questions to expect, let's focus on how to prepare.

Below is our four-step prep plan for Amazon data scientist candidates. If you're preparing for more companies than just Amazon, then check our general data science interview preparation guide. Before investing tens of hours preparing for an interview at Amazon, you should take some time to make sure it's actually the right company for you. Most candidates fail to do this.

Practice the method using example questions such as those in section 2.1, or those for coding-heavy Amazon positions (e.g. Amazon software development engineer interview guide). Practice SQL and programming questions with medium and hard level examples on LeetCode, HackerRank, or StrataScratch. Take a look at Amazon's technical topics page, which, although it's designed around software development, should give you an idea of what they're looking out for.

Keep in mind that in the onsite rounds you'll likely have to code on a whiteboard without being able to execute it, so practice working through problems on paper. Free courses are also available online covering introductory and intermediate machine learning, as well as data cleaning, data visualization, SQL, and others.

Tools To Boost Your Data Science Interview Prep

Make sure you have at least one story or example for each of the principles, from a wide range of positions and projects. Finally, a great way to practice all of these different types of questions is to interview yourself out loud. This may sound strange, but it will significantly improve the way you communicate your answers during an interview.

Trust us, it works. Practicing by yourself will only take you so far. One of the main challenges of data scientist interviews at Amazon is communicating your different answers in a way that's easy to understand. As a result, we strongly recommend practicing with a peer interviewing you. If possible, a great place to start is to practice with friends.

However, be warned, as you may come up against the following problems: it's hard to know if the feedback you get is accurate; your peers are unlikely to have insider knowledge of interviews at your target company; and on peer platforms, people often waste your time by not showing up. For these reasons, many candidates skip peer mock interviews and go straight to mock interviews with an expert.

FAANG Data Science Interview Prep

That's an ROI of 100x!

Traditionally, data science would focus on mathematics, computer science and domain expertise. While I will briefly cover some computer science fundamentals, the bulk of this blog will mostly cover the mathematical basics you might need to brush up on (or even take a whole course on).

While I understand most of you reading this are more math heavy by nature, realize that the bulk of data science (dare I say 80%+) is collecting, cleaning and processing data into a useful form. Python and R are the most popular languages in the data science space. I have also come across C/C++, Java and Scala.

Pramp Interview

It is common to see the majority of data scientists falling into one of two camps: Mathematicians and Database Architects. If you are the second one, this blog won't help you much (YOU ARE ALREADY AWESOME!).

Data collection might involve gathering sensor data, parsing websites or carrying out surveys. After collecting the data, it needs to be transformed into a usable form (e.g. a key-value store in JSON Lines files). Once the data is collected and put in a usable format, it is essential to perform some data quality checks.
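As a rough illustration of the two steps above, the sketch below parses a few JSON Lines records and runs two basic quality checks (missing values and exact duplicates). The sensor records, field names and the `quality_report` helper are hypothetical and only meant to show the idea; real checks depend on the dataset.

```python
import io
import json

# Hypothetical sensor readings in JSON Lines form: one JSON object per line.
RAW_JSONL = """\
{"sensor_id": "a1", "temperature": 21.5}
{"sensor_id": "a2", "temperature": null}
{"sensor_id": "a1", "temperature": 21.5}
{"sensor_id": "a3", "temperature": 19.0}
"""


def read_jsonl(stream):
    """Parse a JSON Lines stream into a list of dicts, skipping blank lines."""
    return [json.loads(line) for line in stream if line.strip()]


def quality_report(records, required_fields):
    """Count missing values per field and exact duplicate records."""
    missing = {field: 0 for field in required_fields}
    seen = set()
    duplicates = 0
    for record in records:
        for field in required_fields:
            if record.get(field) is None:
                missing[field] += 1
        # Serialize with sorted keys so identical records compare equal.
        key = json.dumps(record, sort_keys=True)
        if key in seen:
            duplicates += 1
        seen.add(key)
    return {"rows": len(records), "missing": missing, "duplicates": duplicates}


records = read_jsonl(io.StringIO(RAW_JSONL))
report = quality_report(records, ["sensor_id", "temperature"])
print(report)
```

In practice the same `read_jsonl` function could be handed an open file object instead of the in-memory string used here.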

Interview Prep Coaching

However, in cases of fraud, it is very common to have heavy class imbalance (e.g. only 2% of the dataset is actual fraud). Such information is important for making the right choices in feature engineering, modelling and model evaluation. For more information, check my blog on Fraud Detection Under Extreme Class Imbalance.
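A quick way to see why imbalance matters for model evaluation is to measure the class proportions and the accuracy of a model that always predicts the majority class. The snippet below is a minimal sketch using made-up labels that mirror the 2% example; `class_balance` is a hypothetical helper, not part of any library.

```python
from collections import Counter


def class_balance(labels):
    """Return the fraction of the dataset that belongs to each class."""
    counts = Counter(labels)
    total = len(labels)
    return {label: count / total for label, count in counts.items()}


# Made-up labels: 1 = fraud, 0 = legitimate (2% fraud, as in the example above).
labels = [1] * 2 + [0] * 98

balance = class_balance(labels)
majority_label = max(balance, key=balance.get)

# A model that always predicts the majority class already reaches this accuracy,
# which is why plain accuracy is a weak metric under heavy imbalance.
baseline_accuracy = balance[majority_label]

print(balance)
print(majority_label, baseline_accuracy)
```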

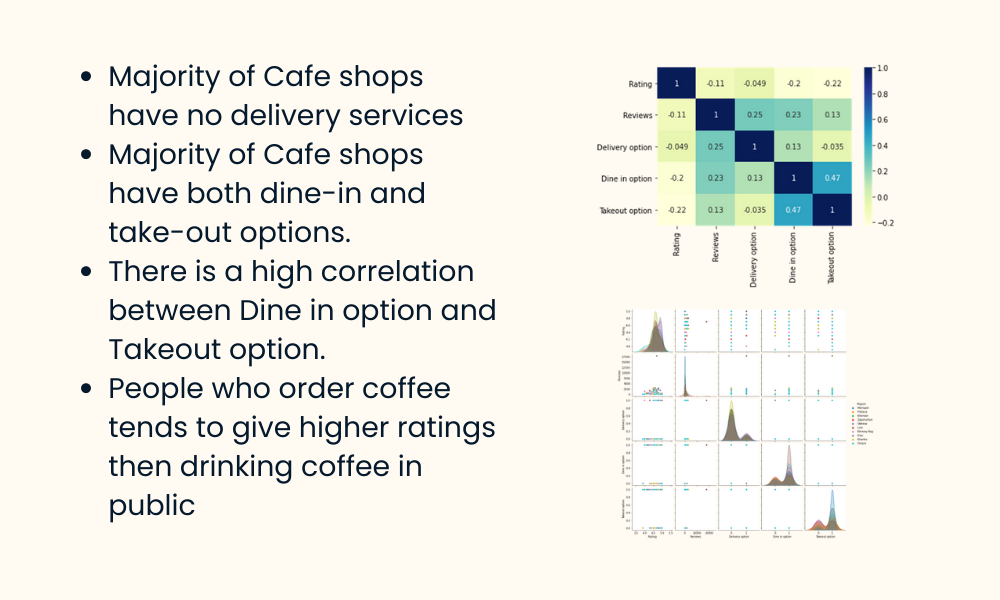

In bivariate analysis, each feature is compared to other features in the dataset. Scatter matrices allow us to find hidden patterns such as:

- features that should be engineered together
- features that may need to be eliminated to avoid multicollinearity

Multicollinearity is an issue for multiple models like linear regression and hence needs to be taken care of accordingly.
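Alongside a scatter matrix, one numeric way to spot candidate multicollinearity is to compute the Pearson correlation for every pair of features and flag the pairs above a cutoff. The sketch below assumes a cutoff of 0.9 and uses invented feature values; both are illustrative choices, not recommendations from this post.

```python
import math
from itertools import combinations


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def correlated_pairs(features, threshold=0.9):
    """Return (name_a, name_b, r) for feature pairs with |r| >= threshold."""
    pairs = []
    for (name_a, a), (name_b, b) in combinations(features.items(), 2):
        r = pearson(a, b)
        if abs(r) >= threshold:
            pairs.append((name_a, name_b, r))
    return pairs


# Invented data: the two height columns carry the same information.
features = {
    "height_cm": [150, 160, 170, 180, 190],
    "height_in": [59.1, 63.0, 66.9, 70.9, 74.8],
    "age": [35, 22, 41, 29, 33],
}

pairs = correlated_pairs(features)
print(pairs)
```

A flagged pair is only a hint: whether to drop one feature, combine them, or keep both still depends on the model being used.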

Imagine using internet usage data. You will have YouTube users going as high as gigabytes while Facebook Messenger users use a couple of megabytes.
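One common way to tame a feature whose values span several orders of magnitude is a log transform. This is an assumption about the intended fix rather than something stated above, and the usage numbers below are invented for illustration.

```python
import math

# Invented monthly usage in megabytes: a few light users and two heavy ones.
usage_mb = [3.0, 5.0, 8.0, 2048.0, 6144.0]

# log1p(x) = log(1 + x) also handles users with zero usage.
log_usage = [math.log1p(value) for value in usage_mb]

raw_range = max(usage_mb) - min(usage_mb)
log_range = max(log_usage) - min(log_usage)

print(raw_range)
print(log_range)
```

The transform keeps the ordering of users intact while compressing the gap between light and heavy users, so a handful of extreme values no longer dominates the feature.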

Another issue is the use of categorical values. While categorical values are common in the data science world, realize that computers can only understand numbers.
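A widely used way to turn categories into numbers is one-hot encoding, where each category becomes its own 0/1 column. The sketch below is a minimal hand-written version with a made-up `browsers` feature; libraries such as pandas and scikit-learn offer their own encoders.

```python
def one_hot_encode(values):
    """Return the sorted category list and one 0/1 row per input value."""
    categories = sorted(set(values))
    index = {category: i for i, category in enumerate(categories)}
    encoded = []
    for value in values:
        row = [0] * len(categories)
        row[index[value]] = 1
        encoded.append(row)
    return categories, encoded


# Made-up categorical feature.
browsers = ["chrome", "safari", "chrome", "firefox"]

categories, encoded = one_hot_encode(browsers)
print(categories)
for row in encoded:
    print(row)
```

Note that a feature with many distinct categories produces many mostly-zero columns, which is one source of sparse dimensions.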

Coding Practice

At times, having too many sparse dimensions will hamper the performance of the model. An algorithm commonly used for dimensionality reduction is Principal Component Analysis, or PCA.
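To make the idea behind PCA concrete, here is a minimal sketch that finds only the first principal component by power iteration on the covariance matrix. This is a teaching simplification, not how production libraries compute PCA (they typically use an SVD), and the function name and toy data are assumptions. Power iteration can also stall if the starting vector happens to be orthogonal to the leading direction.

```python
import math


def first_principal_component(rows, iterations=100):
    """Return the leading principal direction and the data projected onto it."""
    n = len(rows)
    dims = len(rows[0])

    # Center each column so the covariance is computed around the mean.
    means = [sum(row[j] for row in rows) / n for j in range(dims)]
    centered = [[row[j] - means[j] for j in range(dims)] for row in rows]

    # Sample covariance matrix (dims x dims).
    cov = [
        [sum(r[i] * r[j] for r in centered) / (n - 1) for j in range(dims)]
        for i in range(dims)
    ]

    # Power iteration: repeatedly multiply by the covariance and renormalize.
    vector = [1.0] * dims
    for _ in range(iterations):
        product = [sum(cov[i][j] * vector[j] for j in range(dims)) for i in range(dims)]
        norm = math.sqrt(sum(p * p for p in product))
        if norm == 0:
            break  # no variance in the data
        vector = [p / norm for p in product]

    projections = [sum(c * v for c, v in zip(row, vector)) for row in centered]
    return vector, projections


# Toy data lying exactly on the line y = 2x, so one dimension captures everything.
points = [[1.0, 2.0], [2.0, 4.0], [3.0, 6.0], [4.0, 8.0]]

component, projections = first_principal_component(points)
print(component)
print(projections)
```

Here the two original columns collapse into a single coordinate per point without losing information, which is the dimensionality reduction PCA aims for.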

The common categories of feature selection methods and their subcategories are explained in this section. Filter methods are generally used as a preprocessing step. The selection of features is independent of any machine learning algorithm. Instead, features are selected on the basis of their scores in various statistical tests for their correlation with the outcome variable.

Common methods under this category are Pearson's Correlation, Linear Discriminant Analysis, ANOVA and Chi-Square. In wrapper methods, we try to use a subset of features and train a model using them. Based on the inferences that we draw from the previous model, we decide to add or remove features from the subset.
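Going back to filter methods for a moment, the sketch below scores each feature by the absolute value of its Pearson correlation with the outcome and keeps the top `k`, without training any model. The feature names, values and the choice of `k = 2` are invented for illustration.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def select_top_k(features, target, k):
    """Rank features by |correlation with the target| and keep the best k names."""
    scores = {name: abs(pearson(values, target)) for name, values in features.items()}
    ranked = sorted(scores, key=scores.get, reverse=True)
    return ranked[:k], scores


# Invented data: "signal" and "inverse" track the target, "noise" does not.
target = [1, 2, 3, 4, 5]
features = {
    "signal": [2, 4, 6, 8, 10],
    "noise": [3, 1, 4, 1, 5],
    "inverse": [10, 8, 6, 4, 3],
}

selected, scores = select_top_k(features, target, k=2)
print(selected)
print(scores)
```

Because each feature is scored on its own, a filter like this is cheap but cannot detect features that are only useful in combination, which is the gap wrapper methods try to close.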

Mock Tech Interviews

These methods are usually computationally very expensive. Common methods under this category are Forward Selection, Backward Elimination and Recursive Feature Elimination. Embedded methods combine the qualities of filter and wrapper methods. They are implemented by algorithms that have their own built-in feature selection methods. LASSO and RIDGE are common ones. The regularizations are given in the equations below as reference:

Lasso: $$\min_{\beta} \sum_{i=1}^{n} \left(y_i - x_i^\top \beta\right)^2 + \lambda \sum_{j=1}^{p} |\beta_j|$$

Ridge: $$\min_{\beta} \sum_{i=1}^{n} \left(y_i - x_i^\top \beta\right)^2 + \lambda \sum_{j=1}^{p} \beta_j^2$$

That being said, it is important to understand the mechanics behind LASSO and RIDGE for interviews.
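One way to build intuition for the difference is the single-feature, no-intercept case, where both penalized problems have closed-form solutions. Assuming the objectives are the squared error plus `lam * |beta|` for lasso and plus `lam * beta**2` for ridge, lasso soft-thresholds the coefficient (and can set it exactly to zero) while ridge only shrinks it. The data and the value of `lam` below are made up.

```python
import math


def ols_coefficient(xs, ys):
    """Least-squares slope for y ~ beta * x (no intercept)."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / sxx


def ridge_coefficient(xs, ys, lam):
    """Minimizer of sum((y - beta*x)^2) + lam * beta^2."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)


def lasso_coefficient(xs, ys, lam):
    """Minimizer of sum((y - beta*x)^2) + lam * |beta| via soft-thresholding."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    shrunk = max(abs(sxy) - lam / 2, 0.0)
    return math.copysign(shrunk, sxy) / sxx


# Made-up weak feature: the unpenalized slope is only 0.1.
xs = [1.0, 2.0, 3.0]
ys = [0.1, 0.2, 0.3]
lam = 4.0

ols = ols_coefficient(xs, ys)
ridge = ridge_coefficient(xs, ys, lam)
lasso = lasso_coefficient(xs, ys, lam)

print(ols, ridge, lasso)
```

With this penalty the lasso coefficient drops to exactly zero, effectively removing the feature, whereas the ridge coefficient is smaller than the unpenalized one but still non-zero.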

Supervised Learning is when the labels are available. Unsupervised Learning is when the labels are unavailable. Get it? SUPERVISE the labels! Pun intended. That being said, do not mix the two up!!! This mistake is enough for the interviewer to cancel the interview. Also, another noob mistake people make is not normalizing the features before running the model.

Hence, a rule of thumb. Linear and Logistic Regression are the most basic and commonly used machine learning algorithms out there. Before doing any analysis, fit one of them first as a benchmark. One common interview blooper people make is starting their analysis with a more complex model like a neural network. No doubt, neural networks can be highly accurate. However, benchmarks are important.
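As a small illustration of what a benchmark can look like, the sketch below fits a one-feature linear regression in closed form and compares its mean squared error with that of simply predicting the mean. The data is invented, and the scores are computed on the training data only to keep the example short; a real comparison would use held-out data.

```python
def fit_simple_linear_regression(xs, ys):
    """Closed-form least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def mean_squared_error(y_true, y_pred):
    """Average squared difference between observed and predicted values."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


# Invented data that is roughly linear.
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [2.1, 3.9, 6.2, 7.8, 10.1]

slope, intercept = fit_simple_linear_regression(xs, ys)
linear_mse = mean_squared_error(ys, [slope * x + intercept for x in xs])

# Trivial reference point: always predict the mean of y.
mean_y = sum(ys) / len(ys)
mean_mse = mean_squared_error(ys, [mean_y] * len(ys))

print(slope, intercept)
print(linear_mse, mean_mse)
```

Any more complex model, a neural network included, then has a concrete number to beat before its extra complexity is justified.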
